Contracts, customer feedback, invoices, multimedia files: working through batches of such data by hand takes people hours or even days. Luckily, recent years have seen the development of advanced AI solutions that can do the same job with almost the same accuracy within seconds.

That’s why it’s not surprising to witness the Intelligent Document Processing (IDP) market grow exponentially: its revenue is projected to increase over the next decade at a CAGR of 28.9%.

But how exactly does AI document processing work? And what are the nuances of implementing it successfully? In this article, we’re answering these questions and more – this is our ultimate guide to AI document ingestion and processing.

What is Document Ingestion?

Document ingestion refers to the acquisition, preparation, and transformation of raw data (spreadsheets, images, PDFs, chat logs, etc.) into a format usable for downstream applications. With the rise of more sophisticated AI solutions like Wippy, ingestion systems today are built to accommodate increasingly diverse inputs, including multimodal formats like text paired with images or video.

Why is document ingestion important? It helps businesses across many industries, for example:

- Financial Institutions: A bank processing thousands of mortgage applications faces diverse file types, including scanned IDs, pay stubs, and digital signatures. AI ingestion systems identify key information (borrower names, income details, and tax records) and feed this data into underwriting tools, reducing approval times from weeks to hours.

- Healthcare: Hospitals ingest patient records, medical images, and diagnostic reports from multiple sources. AI systems standardize formats, extract critical details like medication histories, and prepare the data for analysis in treatment recommendation engines.

- Supply Chain Management: Logistics companies ingest data from delivery manifests, GPS tracking systems, and customer feedback. AI tools integrate this data to provide real-time updates on shipment statuses and predict delays.

What is Document Processing?

Document processing is the handling and manipulation of the raw data extracted during the document ingestion phase. While the document ingestion process deals primarily with bringing the data into the system, document processing is about interpreting, analyzing, and acting on it.

The process begins with extracting information from the ingested data, such as text, images, tables, or metadata. Then, it applies algorithms, often powered by AI, to categorize, classify, and enrich the extracted information. This can include identifying key phrases, understanding document structure, summarizing content, and even detecting anomalies or patterns that would otherwise be invisible to human eyes.

Processing a loan application, for example, might look like this:

- Data Extraction: Basic information like names, addresses, and income levels is extracted using technologies like Optical Character Recognition (OCR) or Natural Language Processing (NLP).

- Contextual Understanding: AI tools assess the meaning behind the data—whether the loan applicant’s salary is sufficient for approval or if there’s missing information in the application.

- Classification & Structuring: The system categorizes the document and organizes the extracted data into structured formats like databases or spreadsheets.

- Validation and Enrichment: After categorizing, AI might cross-reference the extracted data with external databases or previous customer interactions to enrich it.
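
The four steps above can be sketched as a small pipeline. This is a minimal illustration rather than a production design: the field labels, the regular expressions standing in for OCR/NLP output, the `KNOWN_CUSTOMERS` lookup, and the "loan must not exceed four times income" rule are all hypothetical.

```python
import re

# Hypothetical reference data standing in for an external database.
KNOWN_CUSTOMERS = {"Jane Doe": {"customer_since": 2019}}

def extract(text):
    """Step 1: pull basic fields out of already-digitized text."""
    name = re.search(r"Name:\s*(.+)", text)
    income = re.search(r"Annual income:\s*\$?([\d,]+)", text)
    return {
        "name": name.group(1).strip() if name else None,
        "income": int(income.group(1).replace(",", "")) if income else None,
    }

def assess(fields, requested):
    """Step 2: flag missing data and apply a toy affordability rule."""
    missing = [key for key, value in fields.items() if value is None]
    # Assumed rule for illustration only: the loan may not exceed 4x income.
    affordable = fields["income"] is not None and requested <= 4 * fields["income"]
    return {"missing": missing, "affordable": affordable}

def structure(fields, assessment):
    """Step 3: organize everything into one structured record."""
    return {"doc_type": "loan_application", **fields, **assessment}

def enrich(record):
    """Step 4: cross-reference the record against existing customer data."""
    record["existing_customer"] = record["name"] in KNOWN_CUSTOMERS
    return record

def process_application(text, requested):
    fields = extract(text)
    return enrich(structure(fields, assess(fields, requested)))
```

Calling `process_application("Name: Jane Doe\nAnnual income: $85,000", 200000)` returns a single dictionary that a downstream underwriting tool could consume. In a real system each function would be backed by a model or service rather than a regular expression.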

With deep learning models, AI can understand intent, context, and nuances that would be difficult for a traditional rule-based system to comprehend. It has the ability to handle complex tasks, such as understanding partial or incomplete sentences, misspellings, or even mixed-language text.

One key development in this space is named entity recognition (NER), a form of NLP that identifies and classifies named entities (such as people, places, and dates) within documents. When applied to documents, NER can highlight key figures, timelines, and legal references, making it easier for businesses to assess contract terms, for example.

Another critical aspect of AI-based document processing is semantic understanding, which allows AI systems to go beyond pulling out individual pieces of information. For instance, AI doesn’t just extract the word “date” from a document—it understands whether the “date” refers to a due date, a creation date, or an expiration date.
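
To make the due date versus creation date distinction concrete, here is a toy sketch that labels each date by the keywords that precede it. A real system would rely on a trained language model rather than keyword rules. The `ROLE_KEYWORDS` table, the 30-character context window, and the ISO date format are assumptions made purely for illustration.

```python
import re

# Hypothetical keyword table; a trained model would learn these cues instead.
ROLE_KEYWORDS = {
    "due_date": ("due", "payable by"),
    "creation_date": ("issued", "created", "dated"),
    "expiration_date": ("expires", "valid until"),
}

DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")  # assumes ISO-formatted dates

def label_dates(text, window=30):
    """Return (date, role) pairs based on the words just before each date."""
    results = []
    for match in DATE_PATTERN.finditer(text):
        start = max(0, match.start() - window)
        context = text[start:match.start()].lower()
        role, best_pos = "unknown", -1
        for candidate, keywords in ROLE_KEYWORDS.items():
            for keyword in keywords:
                pos = context.rfind(keyword)
                # Prefer the keyword that sits closest to the date.
                if pos > best_pos:
                    role, best_pos = candidate, pos
        results.append((match.group(), role))
    return results
```

For the text `"Invoice issued 2024-03-01. Payment is due 2024-03-31."`, this sketch labels the first date as a creation date and the second as a due date.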

Types of Data Sources

AI systems are designed to process a very wide spectrum of data, from highly structured databases to unstructured and messy real-world content:

Structured Data

Structured data refers to highly organized information that resides in fixed fields within a file or database. Examples include data from relational databases, spreadsheets, and CSV files. These are straightforward to process due to their clear structure, defined fields, and predictable layouts.

Unstructured Data

Unstructured data is commonly estimated to account for 80-90% of all digital data, making it the most common and challenging type for document ingestion AI systems to handle. Unlike structured data, unstructured data lacks predefined organization or formatting, making it difficult to parse and analyze.

Common examples of unstructured data include:

- Text Files and PDFs: Documents such as contracts, invoices, and reports are often stored in text-heavy formats like PDFs. However, the text may not always be easily extractable, especially if the PDF is an image scan rather than a text-based document. Advanced OCR technology is often required to convert these documents into a machine-readable format.

- Emails and Chat Logs: Communications like customer service emails or internal company chat logs are goldmines of insights but notoriously difficult to process due to fragmented and conversational language. AI must interpret context, sentiment, and intent to make sense of this data.

- Social Media Content: Posts, tweets, and comments are highly unstructured and often combine text, images, and hashtags. Analyzing this data involves text extraction, sentiment analysis, and image recognition—all within the same dataset.

Semi-Structured Data

Semi-structured data sits between structured and unstructured data. It has some level of organization but doesn’t adhere to a strict schema like structured data. XML and JSON files, for example, are widely used for storing and transmitting semi-structured data.

Semi-structured data can be complex due to its nested nature. While AI can process this data more easily than fully unstructured sources, extracting meaningful insights often requires advanced parsing techniques and schema validation.
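
As a rough sketch of what parsing and schema validation can look like for nested JSON, the example below flattens a document into dotted paths and checks it against a small set of required fields. The `REQUIRED` schema and the invoice layout are hypothetical; real pipelines often use a dedicated schema language instead.

```python
import json

def flatten(obj, prefix=""):
    """Flatten nested dicts and lists into a dict of dotted-path keys."""
    flat = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            flat.update(flatten(value, f"{prefix}{key}."))
    elif isinstance(obj, list):
        for index, value in enumerate(obj):
            flat.update(flatten(value, f"{prefix}{index}."))
    else:
        flat[prefix[:-1]] = obj  # drop the trailing dot
    return flat

# Hypothetical schema: required paths and the Python type expected at each.
REQUIRED = {"invoice.id": str, "invoice.total": float}

def validate(flat):
    """Return a list of human-readable schema problems (empty if valid)."""
    problems = []
    for path, expected in REQUIRED.items():
        if path not in flat:
            problems.append(f"missing: {path}")
        elif not isinstance(flat[path], expected):
            problems.append(f"wrong type: {path}")
    return problems

raw = '{"invoice": {"id": "INV-1", "total": 99.5, "lines": [{"sku": "A"}, {"sku": "B"}]}}'
flat = flatten(json.loads(raw))
```

Here `flat` contains keys such as `invoice.lines.0.sku`, and `validate(flat)` returns an empty list. Flattening first keeps the validation logic simple, at the cost of losing the original nesting.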

Multimedia Data

Advances in AI have also unlocked the ability to process multimedia data such as images, audio, and videos:

- Images: Scanned documents, photographs, and even handwritten notes fall under this category. AI-powered systems, equipped with OCR and computer vision technologies, can extract text and analyze visual patterns. For example, in healthcare, handwritten prescriptions can be scanned and digitized for further processing, with AI flagging dangerous drug interactions based on the extracted data.

- Audio: Voice recordings from customer support calls, meetings, or interviews are a rich data source. AI systems use automatic speech recognition (ASR) to transcribe audio into text, which can then be processed for insights. For example, in legal settings, AI can transcribe and analyze court hearings to identify critical arguments or patterns in testimony.

- Videos: Video content, such as surveillance footage or recorded training sessions, requires advanced AI models capable of extracting insights from both the visual and auditory components. For instance, retail companies may analyze surveillance videos to optimize store layouts by studying customer movement patterns.

Real-Time Data

Real-time data sources provide continuous streams of information, often requiring immediate processing. These include data from IoT devices, sensors, and live APIs.

For example, logistics companies rely on IoT-enabled tracking devices to monitor fleet locations and conditions. AI systems process this data in real-time to optimize delivery routes, monitor package conditions, or flag delays.

Legacy Data

Older systems may use proprietary formats, obscure coding, or physical storage media that modern software cannot easily access. Advanced AI systems equipped with specialized parsers and conversion tools can bridge this gap, though only to a limited extent.

Methods of Data Ingestion Using AI

Each method of document ingestion is tailored to specific data types, sources, and use cases:

Batch Ingestion

Batch ingestion is the process of collecting and processing data in bulk at scheduled intervals. This approach is often used for static or less time-sensitive data, such as archival records, financial reports, or historical datasets.

How it Works:

- Data is extracted from multiple sources, such as databases, APIs, or file systems.

- AI models preprocess the data, cleaning it by removing duplicates, filling in missing values, and normalizing formats.

- The processed data is stored or forwarded to analytics tools for further use.
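
The preprocessing step above (removing duplicates, filling in missing values, normalizing formats) can be sketched with plain rules. Note that this is a rule-based stand-in, not an AI model, and the `email` and `country` fields are hypothetical.

```python
def clean_batch(records, defaults):
    """Deduplicate, fill missing values, and normalize formats for one batch."""
    seen = set()
    cleaned = []
    for record in records:
        # Normalize formats: trim whitespace and lowercase email addresses.
        row = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        if row.get("email"):
            row["email"] = row["email"].lower()
        # Fill in missing values from the supplied defaults.
        for field, default in defaults.items():
            if row.get(field) in (None, ""):
                row[field] = default
        # Remove duplicates, keyed on the normalized email when present.
        key = row.get("email")
        if key is not None:
            if key in seen:
                continue
            seen.add(key)
        cleaned.append(row)
    return cleaned

batch = [
    {"email": " Ann@Example.com ", "country": "US"},
    {"email": "ann@example.com", "country": ""},
    {"email": "bob@example.com", "country": None},
]
result = clean_batch(batch, {"country": "unknown"})
```

Normalizing before deduplicating matters here: the first two records only become recognizable as duplicates once whitespace and letter case are cleaned up.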

Real-Time Ingestion

Real-time ingestion is a necessity when time-sensitive data needs to be processed immediately.

How it Works:

- Data streams are captured continuously from sources like IoT sensors, transaction logs, or social media feeds.

- AI algorithms process this data on the fly, applying filters, transformations, and analyses in milliseconds.

- Insights are delivered to decision-makers or automated systems in near real-time.
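
A minimal sketch of on-the-fly processing: the generator below consumes readings one at a time and emits an alert as soon as a rolling average crosses a threshold. The `(timestamp, value)` reading format, the window size, and the threshold are assumptions. A production system would read from a message queue or streaming platform rather than a Python list.

```python
from collections import deque

def monitor(readings, window=3, threshold=8.0):
    """Yield an alert whenever the rolling average exceeds the threshold."""
    recent = deque(maxlen=window)  # keeps only the last `window` values
    for timestamp, value in readings:
        recent.append(value)
        average = sum(recent) / len(recent)
        # Only alert once the window is full, to avoid noisy early alerts.
        if len(recent) == window and average > threshold:
            yield {"at": timestamp, "rolling_avg": round(average, 2)}

stream = [(1, 5.0), (2, 6.0), (3, 7.0), (4, 12.0), (5, 13.0)]
alerts = list(monitor(stream))
```

Because `monitor` is a generator, each alert is produced as soon as the triggering reading arrives, without waiting for the stream to end.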

Multimodal Ingestion

Modern systems increasingly deal with multimodal data—information that combines multiple formats such as text, images, and audio.

How it Works:

- AI models specialized for different modalities process each data type (e.g., natural language processing for text, computer vision for images).

- These outputs are unified into a single analytical pipeline, creating a comprehensive view of the dataset.

API-Based Ingestion

APIs (Application Programming Interfaces) are the backbone of many modern ingestion pipelines because they let different systems communicate with each other.

How it Works:

- APIs pull data from platforms, such as SaaS tools, cloud storage, or external databases.

- AI manages the extraction process, ensuring that rate limits, authentication, and other constraints are adhered to without interruptions.

- Retrieved data is parsed and transformed into usable formats.
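
Respecting rate limits usually comes down to pagination plus retries with backoff. The sketch below shows that pattern without any AI involved. The `fetch_page` callable, the `RateLimited` exception, and the `items`/`next` response shape are hypothetical stand-ins for whatever client library and API you actually use.

```python
import time

class RateLimited(Exception):
    """Raised by the (hypothetical) client when the API asks us to slow down."""

def fetch_all(fetch_page, max_retries=3, base_delay=1.0, sleep=time.sleep):
    """Collect every page, backing off exponentially on rate-limit errors."""
    items, cursor = [], None
    while True:
        for attempt in range(max_retries + 1):
            try:
                page = fetch_page(cursor)
                break
            except RateLimited:
                if attempt == max_retries:
                    raise  # give up after the final retry
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
        items.extend(page["items"])
        cursor = page.get("next")
        if cursor is None:
            return items
```

Passing `sleep` as a parameter is a small design choice that makes the function easy to test without real delays.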

Event-Driven Ingestion

Event-driven ingestion is particularly useful in scenarios where data flow depends on specific triggers or conditions.

How it Works:

- Events (e.g., user interactions, file uploads, or sensor readings) act as triggers for data ingestion pipelines.

- AI evaluates the event’s context and determines how to process the incoming data.
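
One common way to structure this is a small registry that maps event types to handlers. The sketch below is illustrative only: `EventBus`, the `file_uploaded` event name, and the extension-based routing rule are hypothetical, and the "context evaluation" is a simple rule rather than a model.

```python
from collections import defaultdict

class EventBus:
    """Tiny in-process event dispatcher."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_type):
        """Decorator that registers a handler for one event type."""
        def register(handler):
            self._handlers[event_type].append(handler)
            return handler
        return register

    def emit(self, event_type, payload):
        """Run every handler registered for this event and collect results."""
        return [handler(payload) for handler in self._handlers.get(event_type, [])]

bus = EventBus()

@bus.on("file_uploaded")
def ingest_file(payload):
    # Decide how to process the file from the event's context (its extension).
    is_image = payload["name"].lower().endswith((".png", ".jpg"))
    return {"file": payload["name"], "route": "ocr" if is_image else "text"}
```

With this in place, `bus.emit("file_uploaded", {"name": "scan.PNG"})` routes the file to OCR, while events with no registered handler are simply ignored.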

File-Based Ingestion

Despite the shift to cloud-based systems, file-based ingestion remains relevant for businesses relying on traditional repositories like PDFs, Word documents, and spreadsheets.

How it Works:

- Files are uploaded or accessed from local storage or shared drives.

- AI tools preprocess the documents using techniques like OCR, entity recognition, and semantic analysis.

Database Ingestion

Many enterprises rely on databases as their primary data repositories. AI simplifies the process of ingesting data from structured databases by automating schema detection, data mapping, and query optimization.

How it Works:

- Data is queried from relational (e.g., MySQL, PostgreSQL) or non-relational (e.g., MongoDB, Cassandra) databases.

- AI systems analyze database schemas, predict the most efficient query paths, and retrieve the necessary information.
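
Schema detection does not have to involve a model at all; most databases expose their own metadata. As a minimal sketch, the example below uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for MySQL or PostgreSQL. The `invoices` table is hypothetical.

```python
import sqlite3

def describe_table(conn, table):
    """Return {column_name: declared_type} using SQLite's table_info pragma."""
    # Table names cannot be bound as parameters, so validate before formatting.
    if not table.isidentifier():
        raise ValueError(f"unsafe table name: {table!r}")
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    # Each row is (cid, name, type, notnull, dflt_value, pk).
    return {row[1]: row[2] for row in rows}

def ingest(conn, table):
    """Read every row of a table as a list of dicts keyed by column name."""
    columns = list(describe_table(conn, table))
    cursor = conn.execute(f"SELECT * FROM {table}")
    return [dict(zip(columns, row)) for row in cursor]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.execute("INSERT INTO invoices VALUES (1, 'Acme', 120.5)")
schema = describe_table(conn, "invoices")
rows = ingest(conn, "invoices")
```

Other databases offer equivalent metadata through their own catalogs, so the same two-step idea (inspect the schema, then map rows to named fields) carries over.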

Web Scraping and Crawler-Based Ingestion

Web scraping involves extracting data from publicly available web pages, while crawlers are automated tools that navigate the web to collect information systematically.

How it Works:

- AI-based scrapers extract HTML content, interpret metadata, and identify relevant information.

- Natural language processing tools analyze extracted text for sentiment, intent, or thematic trends.
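
The HTML extraction step can be sketched with Python's standard `html.parser` module. This example only collects visible text and link targets from markup you already have; fetching pages, crawling, and the NLP analysis are out of scope. The `PageExtractor` class and the sample markup are illustrative.

```python
from html.parser import HTMLParser

class PageExtractor(HTMLParser):
    """Collect visible text and link targets, skipping script/style content."""

    def __init__(self):
        super().__init__()
        self.text_parts = []
        self.links = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside script/style blocks.
        if not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())

def extract_page(html):
    parser = PageExtractor()
    parser.feed(html)
    parser.close()
    return {"text": " ".join(parser.text_parts), "links": parser.links}

sample = (
    "<html><head><style>p{color:red}</style></head><body>"
    '<h1>Prices</h1><p>See <a href="/plans">plans</a>.</p>'
    "<script>var x=1;</script></body></html>"
)
page = extract_page(sample)
```

The resulting `page["text"]` is what you would hand to a sentiment or topic model in the next step.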

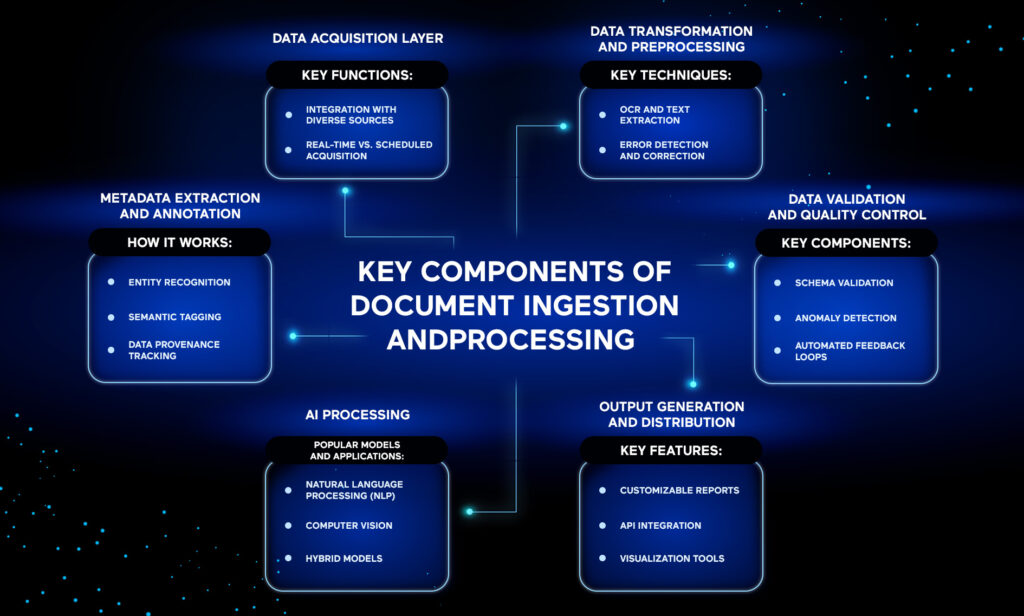

Key Components of Document Ingestion and Processing

Document ingestion and processing are complex systems built upon multiple layers of technologies and methodologies. Each layer plays a specific role, contributing to the extraction, transformation, and utilization of data.

Data Acquisition Layer

The first step in any AI document automation process is acquiring the data. This layer focuses on gathering content from diverse sources and formats.

Key Functions:

- Integration with Diverse Sources: This includes APIs, databases, file systems, IoT devices, and web platforms.

- Real-Time vs. Scheduled Acquisition: Some systems prioritize real-time data ingestion (e.g., for monitoring social media trends), while others rely on batch processing (e.g., for monthly financial reports).

Data Transformation and Preprocessing

Once data is acquired, it must be converted into a usable format. This stage involves cleaning, normalizing, and enriching raw data, which is often riddled with inconsistencies.

Key Techniques:

- OCR and Text Extraction: Optical Character Recognition (OCR) is pivotal for digitizing scanned documents, while AI enhances accuracy by interpreting low-quality images or complex scripts.

- Error Detection and Correction: AI models identify anomalies in datasets, such as duplicate entries or incomplete fields, and suggest or implement corrections automatically.
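
As a simple illustration of automatic correction, the sketch below normalizes dates written in several formats and sets aside records it cannot fix for review. It uses fixed rules rather than a learned model. The `KNOWN_FORMATS` list (which assumes day-first dates) and the `id`/`date` fields are hypothetical.

```python
from datetime import datetime

# Assumed set of formats seen in the source documents, tried in order.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

def normalize_date(raw):
    """Return an ISO 8601 date string, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def audit(records, required=("id", "date")):
    """Split records into corrected rows and rows that need human review."""
    fixed, review = [], []
    for record in records:
        missing = [f for f in required if record.get(f) in (None, "")]
        date = None if missing else normalize_date(record["date"])
        if missing or date is None:
            review.append({"record": record, "missing": missing})
        else:
            fixed.append({**record, "date": date})
    return fixed, review

fixed, review = audit([
    {"id": 1, "date": "31/01/2024"},
    {"id": 2, "date": "2024-02-15"},
    {"id": 3, "date": "next week"},
    {"id": 4, "date": ""},
])
```

Records 1 and 2 end up in `fixed` with ISO dates, while records 3 and 4 land in `review` instead of being silently dropped or guessed at.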

Metadata Extraction and Annotation

This component involves identifying and tagging data elements to enhance searchability and analysis.

How It Works:

- Entity Recognition: AI extracts key details, such as names, dates, and locations, from unstructured data.

- Semantic Tagging: Models like BERT and its successors classify data into categories, creating a structured representation.

- Data Provenance Tracking: Systems also support compliance by documenting the origin and transformation history of data.
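
Provenance tracking can be as simple as keeping an append-only history next to each document. The sketch below records a content hash and timestamp for every transformation. The record layout, the step names, and the `uploads/contract.txt` source path are hypothetical.

```python
import hashlib
from datetime import datetime, timezone

def _entry(step, content):
    """Build one history entry with a content fingerprint and UTC timestamp."""
    return {
        "step": step,
        "sha256": hashlib.sha256(content).hexdigest(),
        "at": datetime.now(timezone.utc).isoformat(),
    }

def new_record(source, content):
    """Start tracking a document from the moment it is acquired."""
    return {"source": source, "content": content,
            "history": [_entry("acquired", content)]}

def apply_step(record, name, transform):
    """Apply a transformation and append it to the provenance trail."""
    record["content"] = transform(record["content"])
    record["history"].append(_entry(name, record["content"]))
    return record

record = new_record("uploads/contract.txt", b"Hello")
record = apply_step(record, "lowercase", bytes.lower)
```

Each history entry lets an auditor confirm which version of the content existed after which step, since any change to the bytes changes the hash.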

Data Validation and Quality Control

The quality of processed data directly impacts decision-making and downstream applications. The validation layer ensures that only accurate and relevant information progresses through the pipeline.

Key Components:

- Schema Validation: AI models verify that data adheres to expected formats or structures, flagging inconsistencies for review.

- Anomaly Detection: By leveraging machine learning, systems identify outliers or unexpected patterns that may indicate data corruption.

- Automated Feedback Loops: Modern systems implement self-improving mechanisms, where errors are logged and used to retrain AI models for greater accuracy.
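
To give a feel for anomaly detection, here is a small statistical stand-in for a machine learning model: it flags values using the modified z-score, which is based on the median absolute deviation. The invoice totals are made-up sample data, and the 3.5 cutoff is a commonly used default rather than a value from this article.

```python
from statistics import median

def find_outliers(values, cutoff=3.5):
    """Flag values whose modified z-score exceeds the cutoff."""
    center = median(values)
    mad = median(abs(v - center) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread around the median, so nothing can be scored
    return [v for v in values if 0.6745 * abs(v - center) / mad > cutoff]

totals = [102.0, 98.5, 101.2, 99.9, 100.4, 1004.0]
suspicious = find_outliers(totals)
```

Here only `1004.0` is flagged, which might indicate a misplaced decimal point. Median-based scores are used because a single extreme value barely shifts the median, unlike the mean.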

AI Processing

AI models interpret and analyze data to generate actionable insights.

Popular Models and Applications:

- Natural Language Processing (NLP): Models like GPT-4 handle text summarization, sentiment analysis, and intent recognition.

- Computer Vision: Tools like Vision Transformers (ViTs) analyze visual data, such as scanned images or diagrams.

- Hybrid Models: Multimodal systems process combined inputs, such as documents containing both text and embedded visuals.

Output Generation and Distribution

After processing, the refined data must be presented in formats suitable for consumption by humans or downstream systems.

Key Features:

- Customizable Reports: AI tailors outputs to specific audiences, such as generating detailed analytics for data scientists and simplified summaries for executives.

- API Integration: Processed data is shared with other software systems for automation or further analysis.

- Visualization Tools: Interactive dashboards powered by AI highlight key metrics and trends.

Best Practices in Document Ingestion and Processing

Lastly, let’s explore a few best practices for optimizing the document ingestion process and minimizing errors:

- Prioritize Source Diversity Without Sacrificing Consistency: AI systems are most effective when they can draw from diverse data sources—emails, PDFs, spreadsheets, web content, scanned documents, etc. Implement preprocessing routines that normalize data from multiple sources into a common structure, such as JSON or XML.

- Implement Robust Error Handling: Use anomaly-detection techniques such as isolation forests or autoencoders to flag inconsistent or anomalous data (e.g., mismatched date formats or invalid values).

- Utilize Metadata: Metadata enriches documents by embedding contextual information that supports advanced search. Use language models such as OpenAI’s GPT-4 or Google’s T5 to automatically extract relevant metadata fields, such as author, topic, and key dates, from complex documents.

- Don’t Forget About Human Oversight: Establish workflows where AI handles repetitive tasks, and humans verify outputs for accuracy and context.
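
The human oversight practice is often implemented as confidence-based routing. The sketch below assumes each extracted field arrives as a hypothetical `(value, confidence)` pair; the 0.9 threshold is arbitrary and would need tuning against your own error rates.

```python
def route(extractions, threshold=0.9):
    """Auto-accept confident fields and queue the rest for human review."""
    accepted, needs_review = {}, {}
    for field, (value, confidence) in extractions.items():
        target = accepted if confidence >= threshold else needs_review
        target[field] = value
    return accepted, needs_review

accepted, needs_review = route({
    "name": ("Jane Doe", 0.98),
    "income": ("85000", 0.62),
})
```

In this example the name is accepted automatically, while the low-confidence income value is held back for a person to verify.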

Conclusion

Despite its remarkable progress, AI document processing is not without its hurdles. Data inconsistency, bias in models, and evolving privacy regulations are still big concerns in 2025.

To address these, your focus must be on building robust, secure, and transparent systems. And you can achieve that with a solution like Wippy, as it will enable you to create an environment where AI agents can not only execute tasks but also reflect on their performance and adjust accordingly.

On top of that, if you are curious about how AI can help your business in other areas, feel free to consult with AI automation experts like Spiral Scout.