Software Testing Strategy for Demo Automation Software

CI/CD Pipeline, Automated Testing, AssertJ for Articulate testing

About the Project

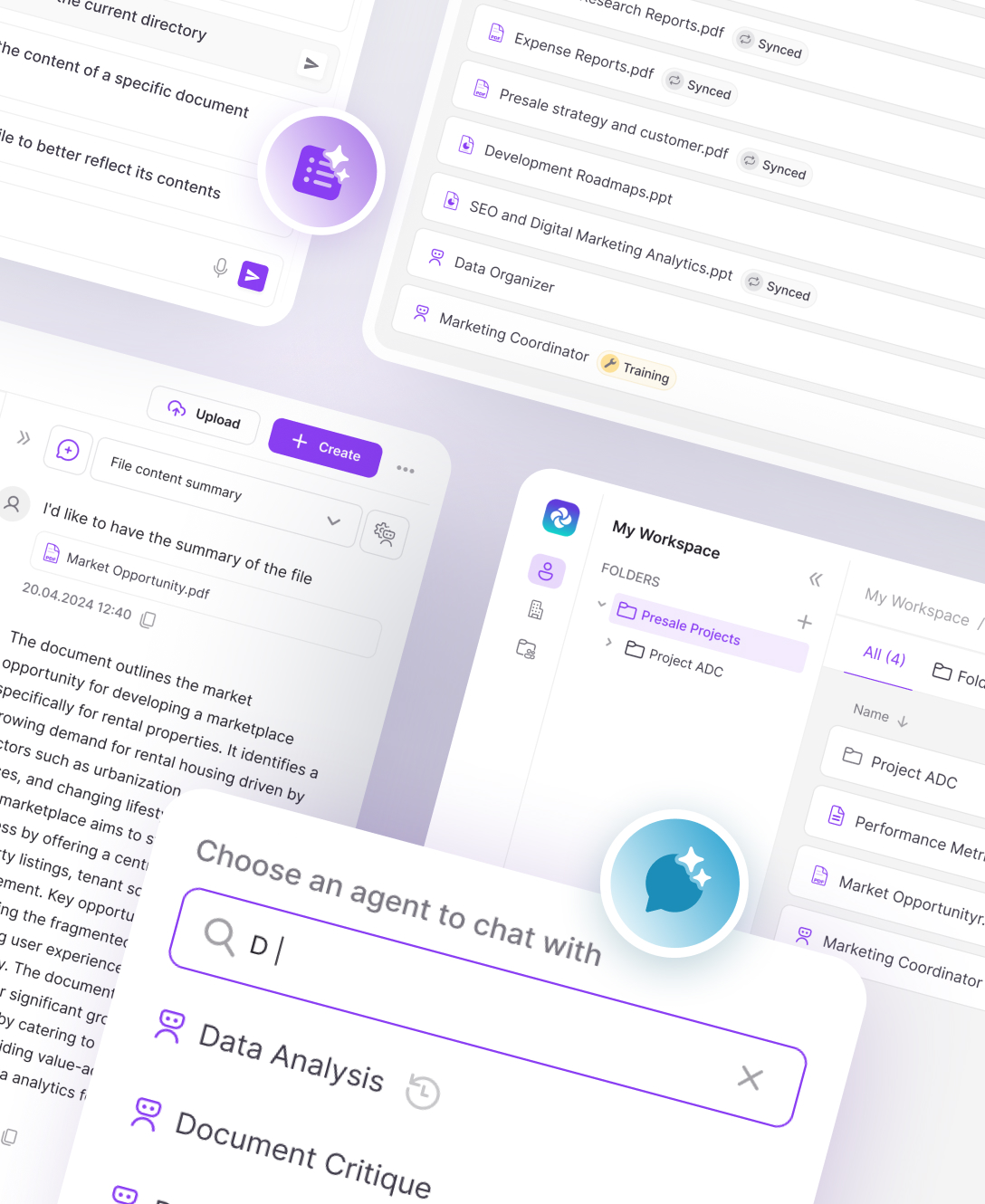

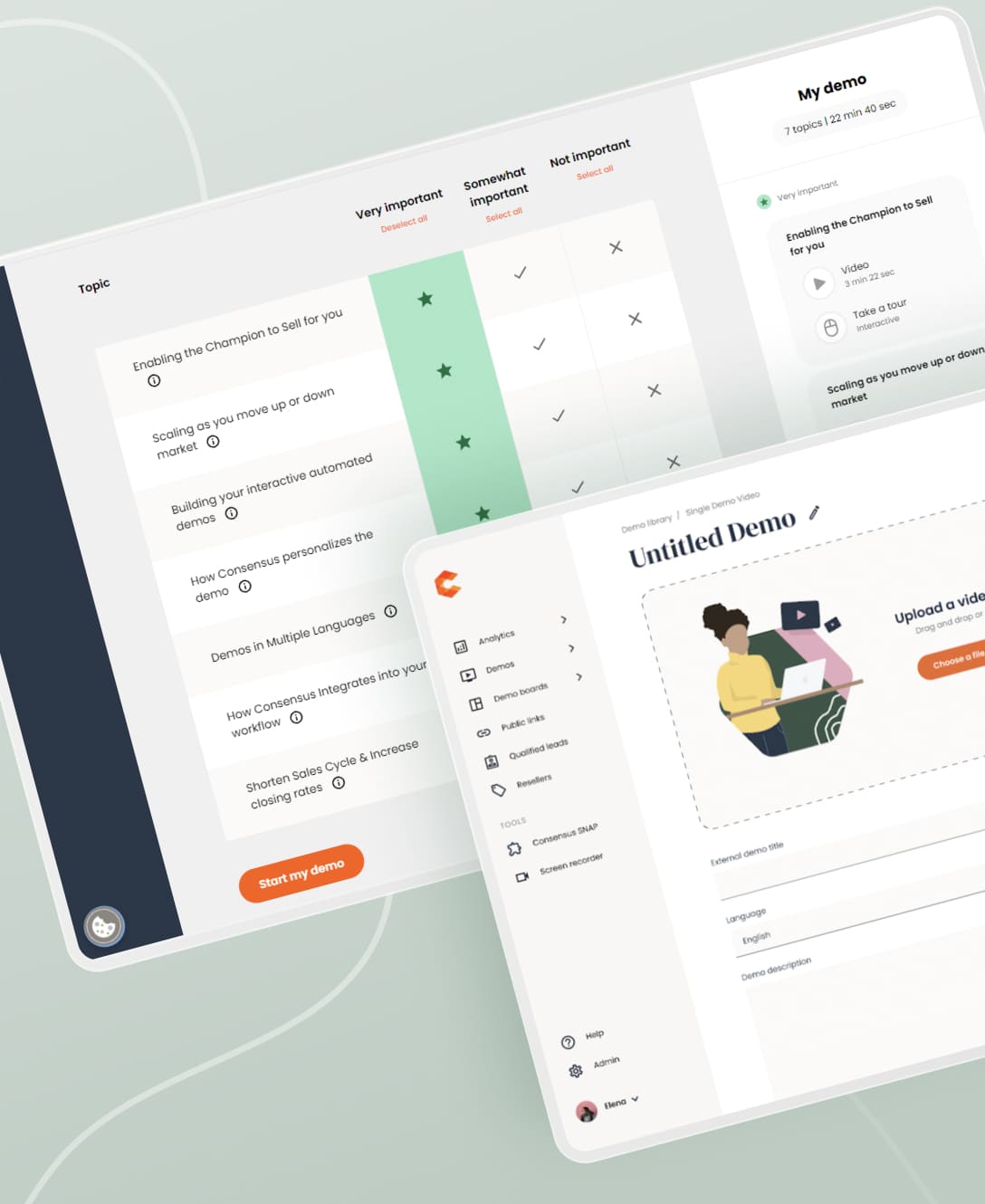

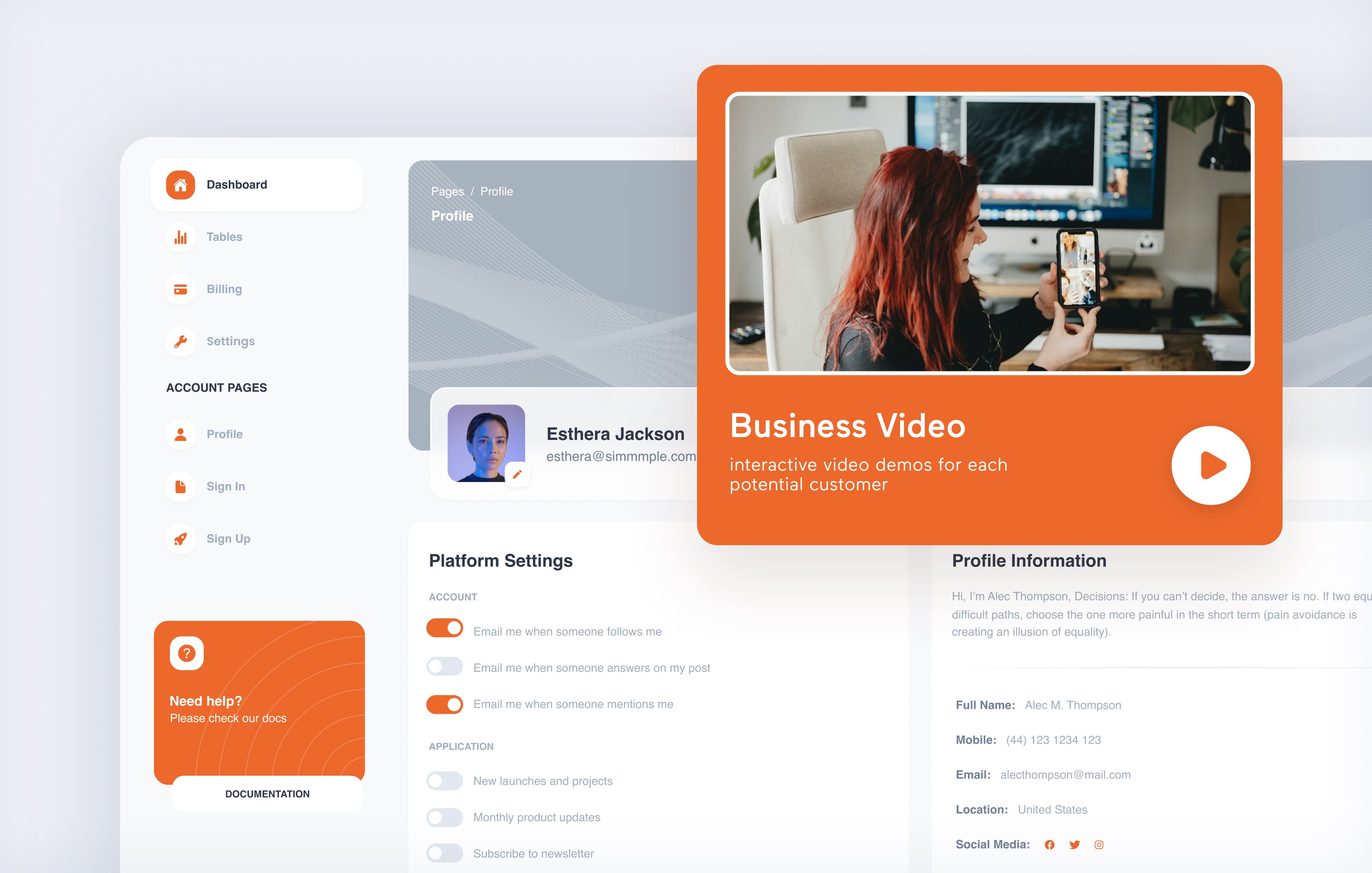

Our client, a leader in personalized video asset management, provides businesses with interactive, customized demos tailored to each potential customer’s interests. Their technology streamlines the buying journey by delivering content specific to each stakeholder, improving engagement and conversion rates.

To keep up with the demands of continuous feature updates and rapid deployments, the company required a scalable test automation framework that could eliminate regression issues, reduce reliance on manual testing, and seamlessly support their transition to a microservices-based architecture.

By implementing advanced automated testing strategies, integrating Wippy.ai for AI-driven test generation, and optimizing the CI/CD pipeline, Spiral Scout delivered a highly resilient testing infrastructure that significantly improved product stability and release efficiency.

Objectives

- Implement a robust test automation strategy to manage regression issues.

- Streamline the testing process and reduce the burden of manual testing.

- Ensure the transition to a microservices architecture is smooth and efficient.

- Build a resilient testing framework for future changes.

Challenges and Solutions

Regression Testing Was Slowing Releases

Before automation, every release required extensive manual regression testing, which delayed deployments and created bottlenecks in the development cycle.

Transition to Automation Testing

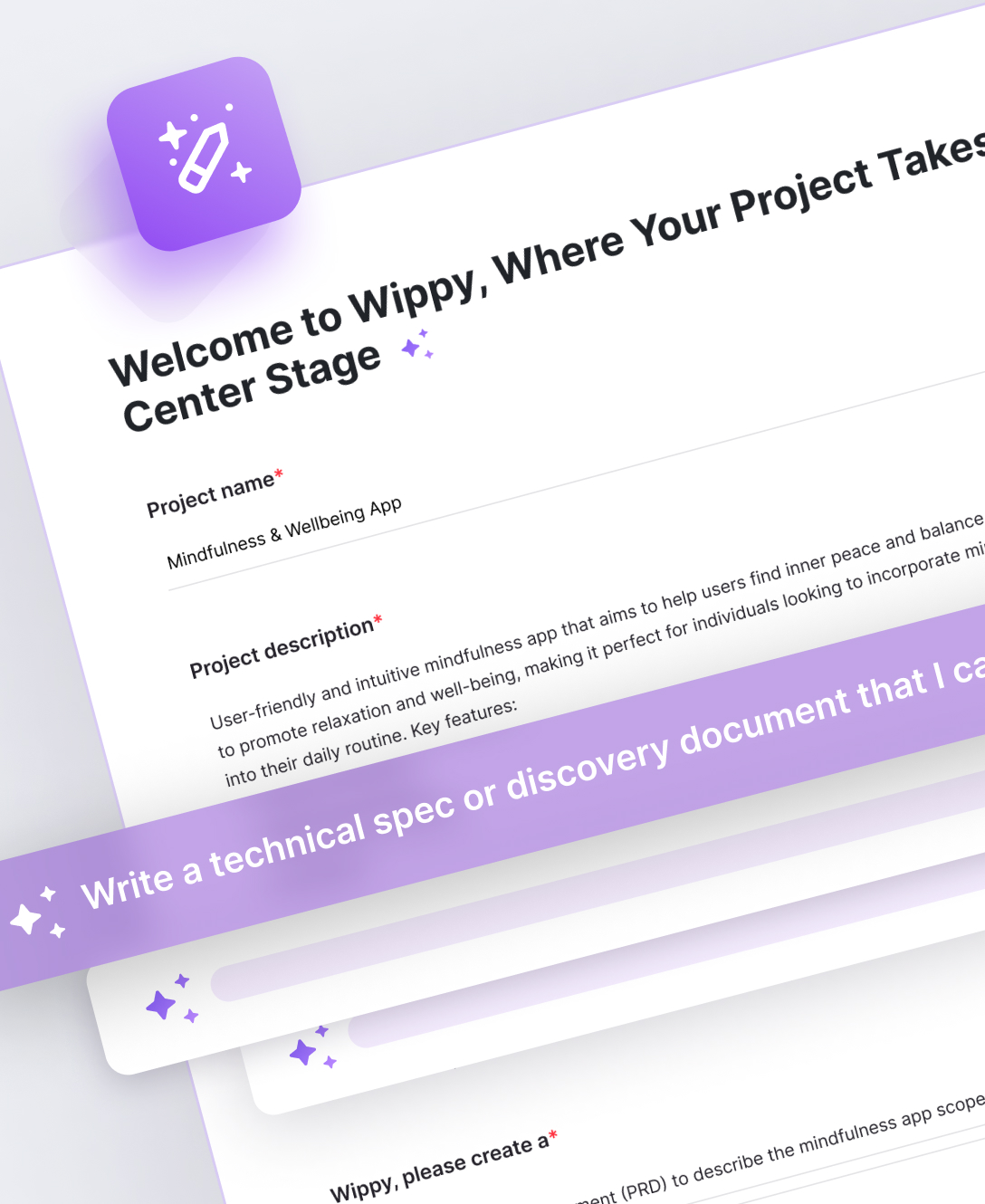

We implemented a robust automated testing framework covering both API and UI layers, reducing regression testing time from several days to just a few hours. AI-powered test generation using Wippy.ai helped create and optimize test cases, reducing developer and QA workload.

Lack of Scalability in Testing Strategy

With a growing user base and feature set, the manual QA process was no longer sustainable, particularly because the client wanted to keep costs down. Our initial research showed that expanding test coverage while maintaining efficiency was critical.

Scalable Automation Strategy

We designed a scalable test automation strategy that continuously adapts to codebase changes. The test suite dynamically expands using AI-driven scripting, allowing for rapid creation, modification, and execution of test cases across different environments.

Transitioning to a Microservices Architecture

We strongly recommended that the client migrate to a microservices-based infrastructure, knowing that the move would add complexity around service communication, API validation, and data consistency.

Build Microservices

We built microservices-focused automated tests to validate API interactions, system stability, and integration points. Next, we tested and implemented Wippy.ai’s AI-driven automation to generate and maintain API test scripts dynamically. Finally, we adopted service virtualization techniques to simulate dependencies and ensure consistent test outcomes.
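To illustrate the service virtualization idea, here is a minimal sketch using only the Python standard library. It is not the project's actual tooling: the stub service, the `/assets/42` path, the canned payload, and the `fetch_asset_title` function are all hypothetical names chosen for the example. The stub answers with fixed JSON so that a test of the calling code does not depend on a real downstream service being available.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Canned responses standing in for a real downstream service (hypothetical data).
STUB_RESPONSES = {
    "/assets/42": {"id": 42, "title": "Product Tour"},
}


class StubAssetService(BaseHTTPRequestHandler):
    """Virtualized dependency: returns canned JSON instead of running real logic."""

    def do_GET(self):
        payload = STUB_RESPONSES.get(self.path)
        status = 200 if payload is not None else 404
        if payload is None:
            payload = {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def start_stub():
    """Start the stub on a free local port and return (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), StubAssetService)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    return server, f"http://{host}:{port}"


def fetch_asset_title(base_url, asset_id):
    """Code under test (hypothetical): calls the asset service and reads the title."""
    url = f"{base_url}/assets/{asset_id}"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.loads(resp.read())["title"]
```

A test would call `start_stub()`, point `fetch_asset_title` at the returned base URL, assert on the result, and then call `server.shutdown()`. Because the stub always returns the same payload, the outcome does not vary with the state of any real dependency.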

Ensuring Cross-Browser and Device Compatibility

With users accessing the demo platform across multiple browsers and devices, test coverage had to be expanded to guarantee a seamless experience.

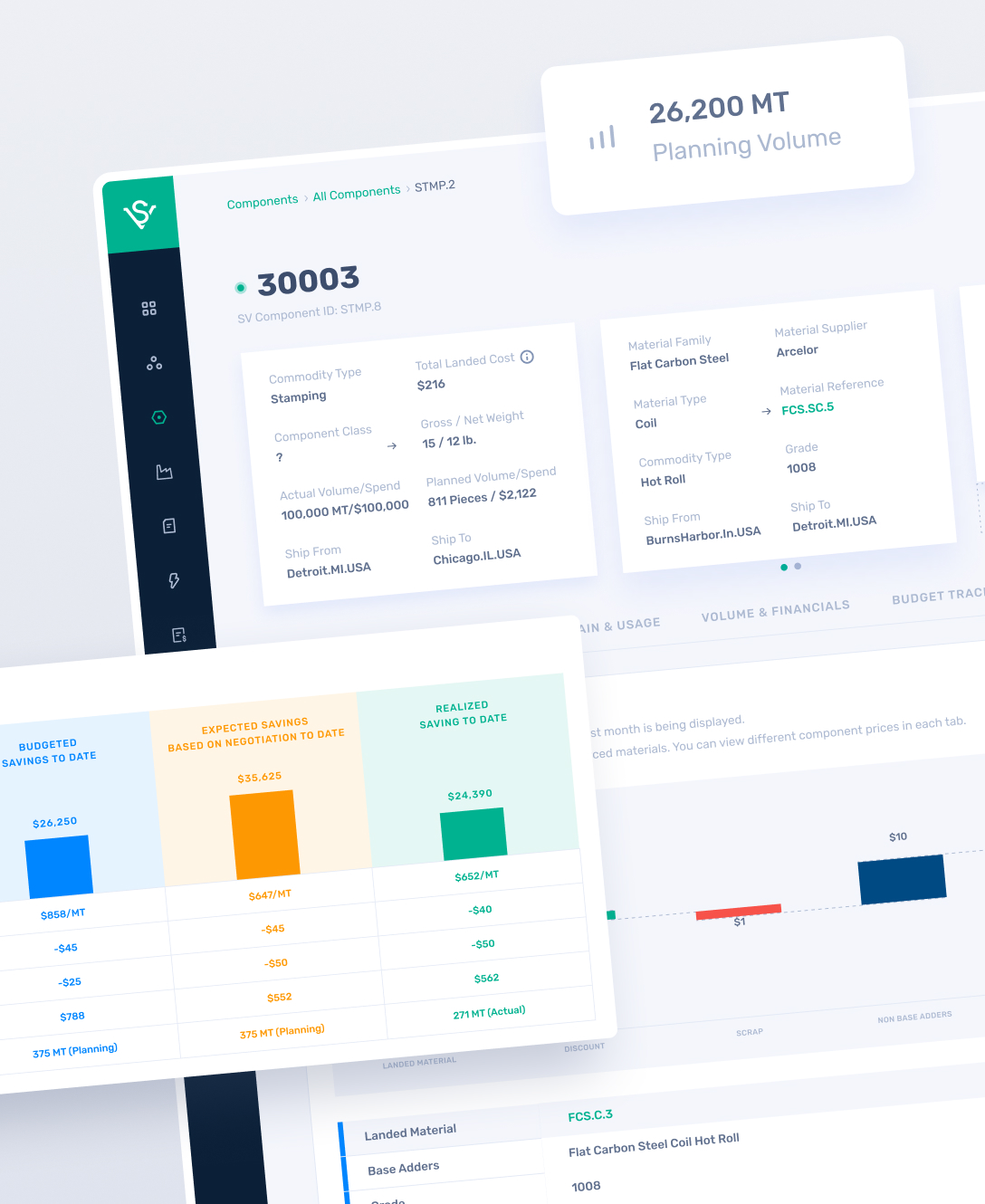

Data-Driven Insights with Allure TestOps

We used Allure TestOps for centralized test management, which provided data-driven insights and reporting to monitor test results and trends. We also developed a cross-browser testing framework that uses parallel execution, reducing testing time by 40%, and verified mobile and desktop compatibility across Chrome, Firefox, Safari, and Edge.
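The parallel execution approach can be sketched roughly as follows. This is an assumption-laden illustration, not the framework built for the client: `run_smoke_check` is a hypothetical placeholder for a real browser session, and the form-factor list is invented for the example. The point is only that each browser and form-factor combination is an independent job that can run concurrently.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

BROWSERS = ["chrome", "firefox", "safari", "edge"]
FORM_FACTORS = ["desktop", "mobile"]  # hypothetical split for the example


def run_smoke_check(browser, form_factor):
    """Placeholder for a real browser-driven check (hypothetical).

    It only reports success so the sketch stays self-contained.
    """
    return {"browser": browser, "form_factor": form_factor, "passed": True}


def run_matrix(check=run_smoke_check, max_workers=4):
    """Run the check for every browser/form-factor pair in parallel.

    Results are returned in the same order as the input combinations.
    """
    combos = list(product(BROWSERS, FORM_FACTORS))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(check, browser, form) for browser, form in combos]
        return [future.result() for future in futures]
```

Calling `run_matrix()` yields one result per combination (eight with the lists above). In a real suite the speedup depends on how many browser sessions the test infrastructure can host at once, so `max_workers` would be tuned to that limit.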

Automation Testing Strategy

Overview of the critical steps that shaped the project’s success and addressed its key testing challenges.

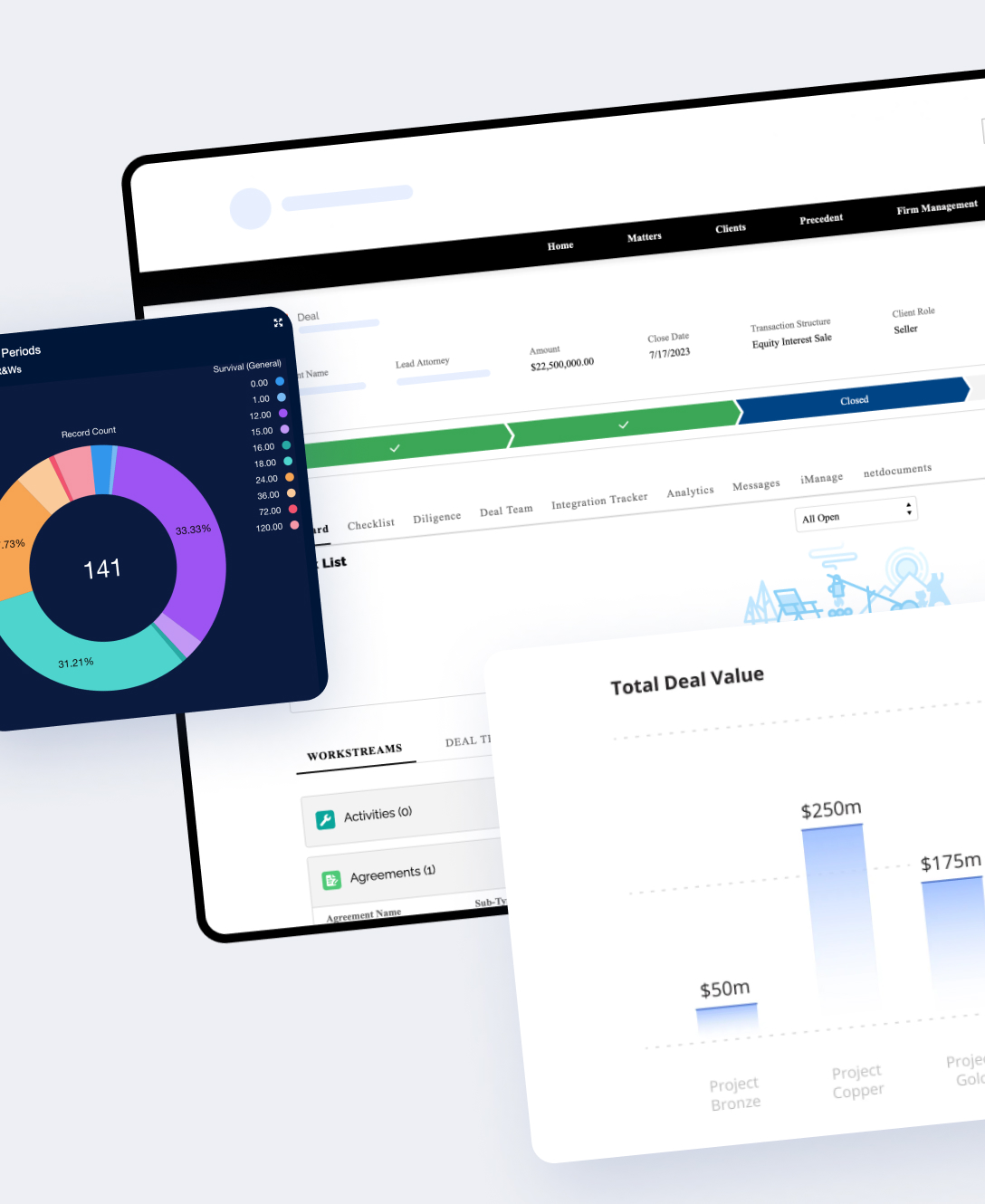

CI/CD Pipeline with Integrated Testing

Automated tests were seamlessly integrated into the CI/CD pipeline, blocking unstable builds from being deployed and reducing the risk of defects reaching production.
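One common way to block an unstable build is to have the test stage return a non-zero exit code, which most CI systems treat as a failed step. The sketch below shows that idea under stated assumptions: the result format and the `gate_exit_code` function are hypothetical and do not describe the client's pipeline configuration.

```python
import sys


def gate_exit_code(results):
    """Return 0 only when at least one test ran and none failed.

    `results` is a list of dicts with "name" and "passed" keys
    (a hypothetical format chosen for this example).
    """
    if not results:
        return 1  # an empty run is treated as unstable, not as success
    failed = [r["name"] for r in results if not r["passed"]]
    for name in failed:
        print(f"FAILED: {name}", file=sys.stderr)
    return 1 if failed else 0
```

A pipeline step would finish with `sys.exit(gate_exit_code(results))`, so that any failing test, or a run that collected no tests at all, stops the deployment stage from starting.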

AI-Powered Test Generation with Wippy.ai

Leveraging Wippy.ai’s AI-driven automation, the system could auto-generate end-to-end tests, significantly reducing the manual effort required to maintain test coverage.

Empowered the Manual QA Team

Automation allowed the manual QA team to focus on detailed exploratory testing and managing new features.

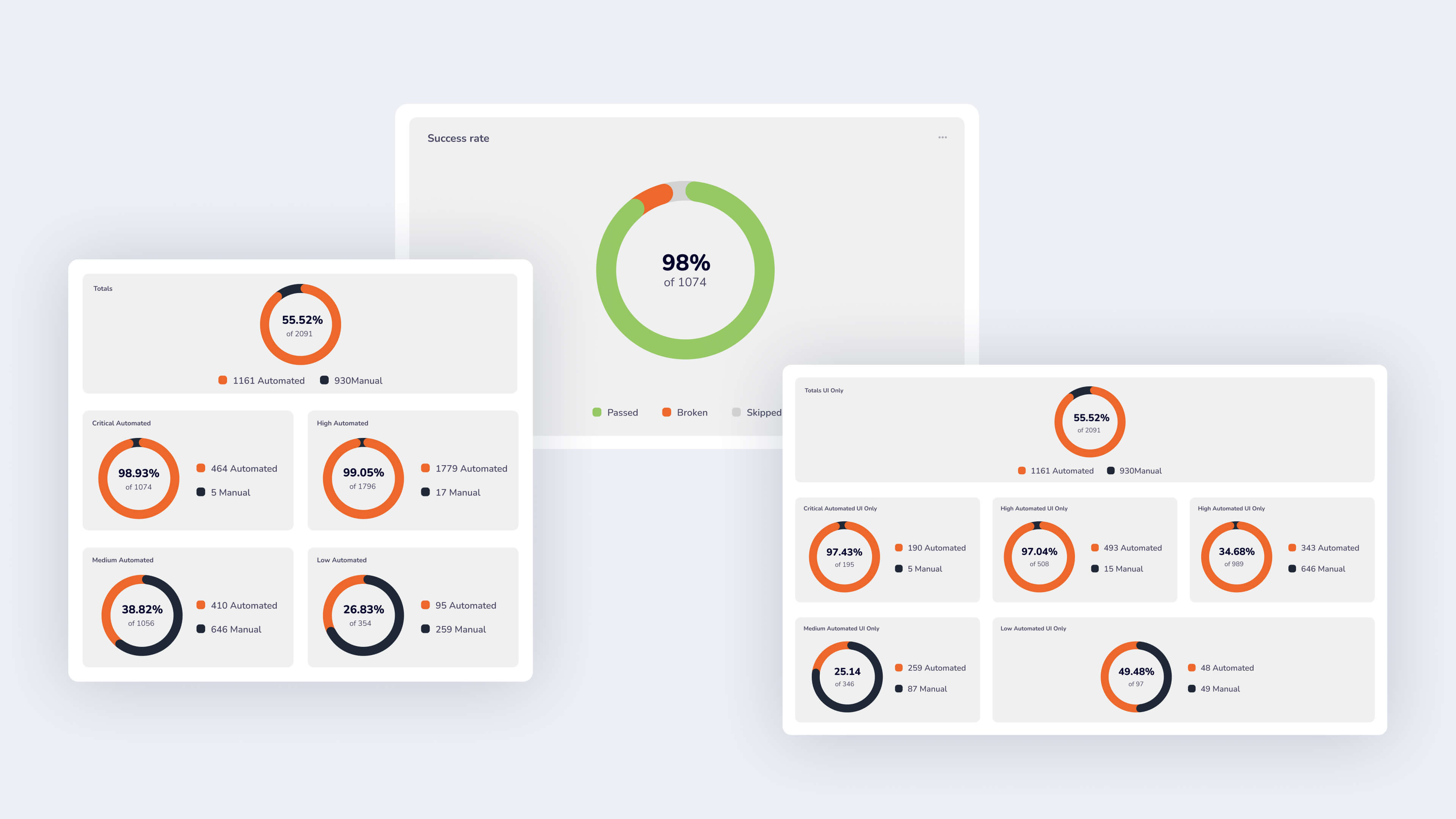

Project Results

By combining AI-driven test automation, intelligent regression testing, and microservices-ready testing strategies, Spiral Scout transformed the client’s quality assurance process, making their demo platform more stable, more scalable, and faster to deploy.

With Wippy.ai now capable of writing test cases, this case study serves as a blueprint for other SaaS companies looking to automate their testing strategies, reduce costs, and increase development velocity.

Key Outcomes

- 90% Reduction in Regression Testing Time: Regression testing went from days to just a few hours, allowing for faster and more frequent releases.

- 40% Faster Cross-Browser & Mobile Testing

- 50% Reduction in QA Overhead

The AQA team’s role is not just testing, but also promoting a culture of quality. With strong automation, efficient regression testing, and a team-wide commitment to quality, we don’t just release the project; we launch it with confidence.

- Overall Score: 5.0

- Scheduling (On Time / Deadline): 5.0

- Quality (Service & Deliverables): 5.0

- Cost (Value / Within Estimates): 5.0

- NPS (Willing to Refer)

At Spiral Scout, we believe that when it comes to software development and delivery, it’s time for a change.